ML-Powered Anti-collision Driving Assistant

Project Overview:

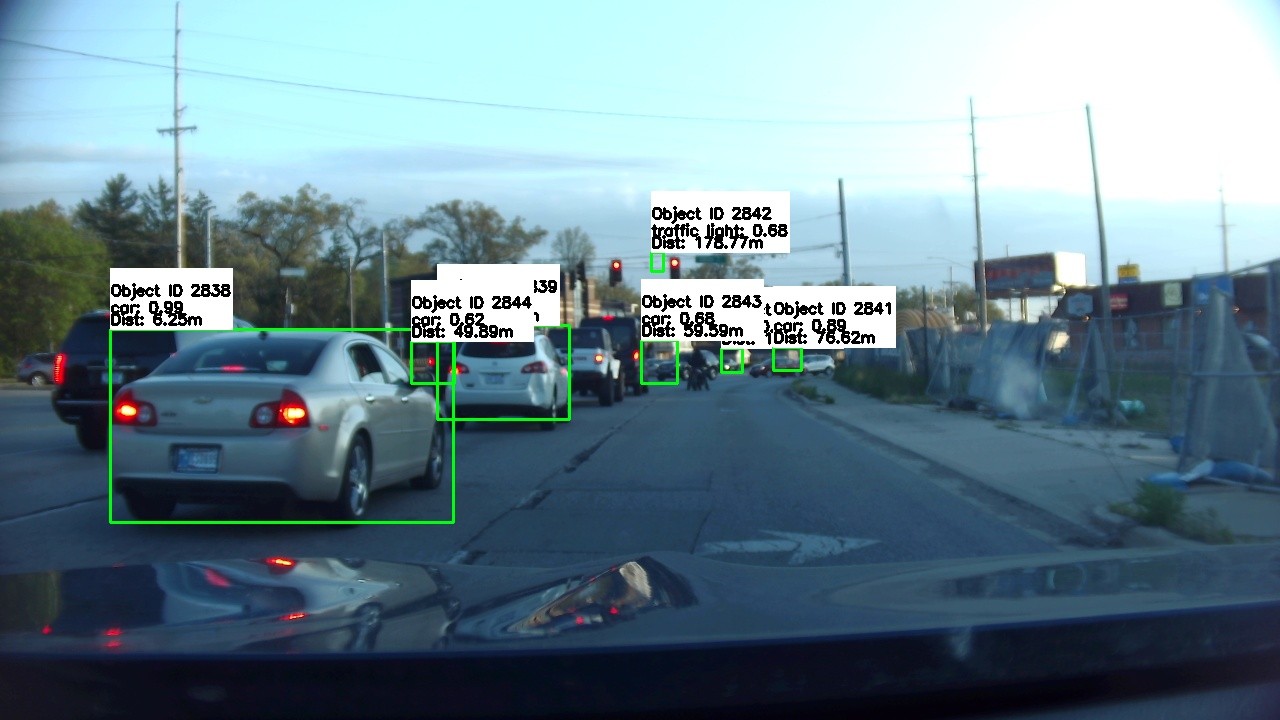

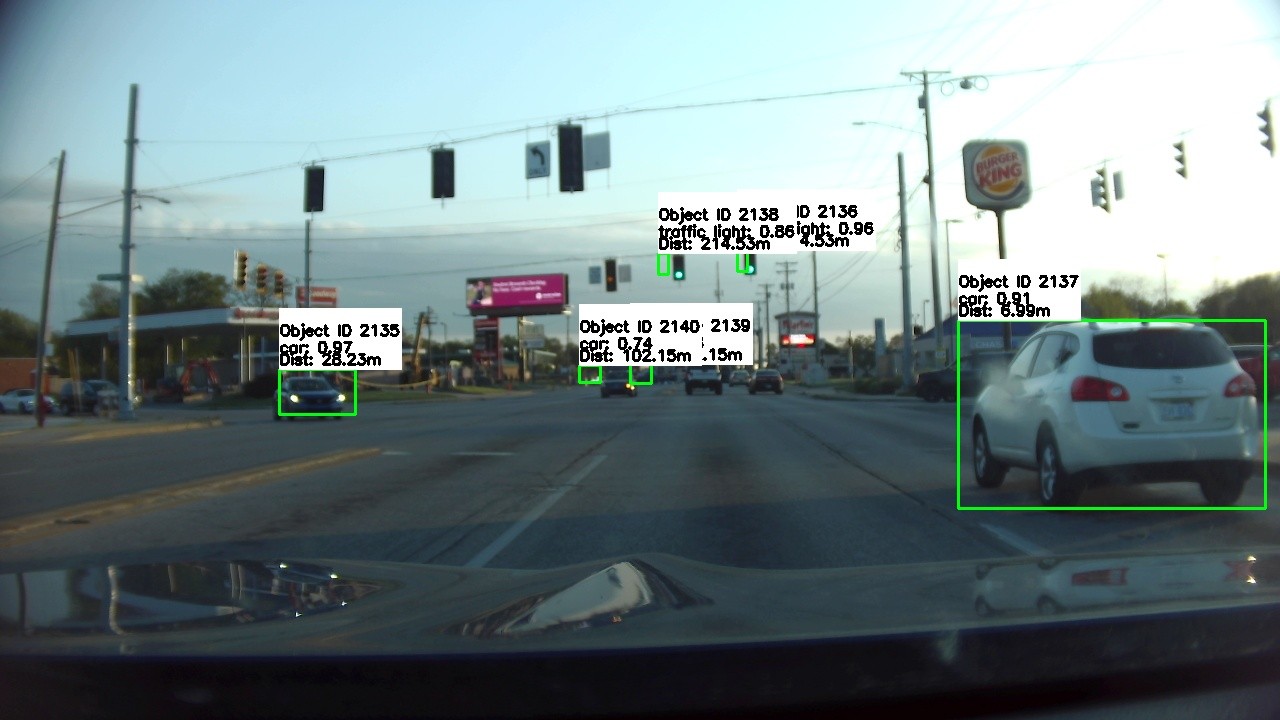

For our capstone project, two teammates and I set out to develop a machine-learning-based car sensor system that uses a camera to improve road safety by detecting potential hazards. The system aims to give drivers timely, accurate alerts using object detection algorithms. By detecting hazards such as vehicles, pedestrians, and road signs, it can warn drivers of imminent dangers, allowing for proactive rather than reactive driving.

Our final approach to developing the machine-learning-powered dashboard camera system was systematic and focused on high-performance, real-time object detection tailored for automotive safety. It involved several key components:

• Hardware Integration: Configuring the Raspberry Pi to interface with a high-definition dash camera and peripheral alert systems (LEDs and speakers).

• Software Development: Developing Python scripts to handle real-time video processing, object detection using TensorFlow Lite, and trigger mechanisms for alerts.

• Model Deployment: A quantized MobileNet V2 model in TensorFlow Lite format was fine-tuned on the BDD-100K dataset for 40k training steps and then deployed to recognize objects with high accuracy and speed.
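
Because the deployed model is quantized, its tensors are stored as 8-bit integers rather than floats. TensorFlow Lite uses an affine scheme in which an integer q represents the real value scale * (q - zero_point), with scale and zero_point stored per tensor. The plain-Python sketch below shows that mapping in both directions; the SCALE and ZERO_POINT values are illustrative assumptions, not parameters read from this project's model.

```python
def quantize(value: float, scale: float, zero_point: int) -> int:
    """Map a real value to uint8 with affine quantization, clamped to [0, 255]."""
    q = round(value / scale) + zero_point
    return max(0, min(255, q))


def dequantize(q: int, scale: float, zero_point: int) -> float:
    """Recover the approximate real value: scale * (q - zero_point)."""
    return scale * (q - zero_point)


# Assumed parameters: 256 steps covering roughly [0.0, 1.0], e.g. a confidence score.
SCALE = 1.0 / 255
ZERO_POINT = 0

score = 0.8
q = quantize(score, SCALE, ZERO_POINT)
print(q, round(dequantize(q, SCALE, ZERO_POINT), 4))  # prints: 204 0.8
```

For values inside the representable range, a round trip loses at most half a quantization step (here about 0.002), which is the precision traded for a smaller, faster model.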

Hardware components

Raspberry Pi: Processes the input from the USB camera, runs the object detection model, and controls the output signals to the speaker and LEDs.

USB Camera: Attached to the Raspberry Pi, this camera captures live video of the road. The Raspberry Pi then processes the feed to detect objects such as cars, traffic signs, traffic lights, and pedestrians.

Speaker: This component is used to alert the user audibly when an object is detected. Depending on the object type, different sounds are played.

LEDs on Breadboard:

Red LED: Lights up when a car is detected.

Blue LED: Indicates the detection of a traffic sign or traffic light.

Yellow LED: Activated when a pedestrian is detected.

Green LED: Shows that the detection system is actively running and monitoring the road.

Power Bank: Powers the Raspberry Pi, making the system portable and independent of a fixed power source, which allows flexibility in testing and deployment.
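
The object-to-alert assignments above can be kept in a single lookup table, so adding or changing an alert touches one place. The sketch below is a minimal, hypothetical version: the label strings, sound file names, and the Alert and alert_for names are assumptions, and the GPIO calls that would actually drive the LEDs on the Raspberry Pi are left out.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Alert:
    led: str    # LED color on the breadboard
    sound: str  # hypothetical sound file played through the speaker


# Detected label -> alert, following the LED assignments listed above.
# Label strings and sound file names are illustrative assumptions.
ALERTS = {
    "car": Alert(led="red", sound="car.wav"),
    "traffic sign": Alert(led="blue", sound="traffic_sign.wav"),
    "traffic light": Alert(led="blue", sound="traffic_light.wav"),
    "pedestrian": Alert(led="yellow", sound="pedestrian.wav"),
}

# Lit for as long as the detection loop is running, independent of detections.
STATUS_LED = "green"


def alert_for(label: str) -> Optional[Alert]:
    """Return the alert for a detected label, or None if the label has no alert."""
    return ALERTS.get(label)


print(alert_for("pedestrian"))  # prints: Alert(led='yellow', sound='pedestrian.wav')
```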

Input is read from the USB camera as a VideoStream. Each frame is processed by the MobileNet V2 model, which assigns a class to every detected object. When a class is detected, the sound and LED designated for that class are activated.
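
The per-frame flow described above can be sketched as a function that takes one frame's detections and returns the LEDs to light. This is a hypothetical sketch, not the project's code: the camera read and the MobileNet V2 inference step are replaced by a list of pre-computed Detection objects, and the 0.5 confidence threshold is an assumed value, since the source does not state one.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass
class Detection:
    label: str    # class name predicted by the detector
    score: float  # confidence in [0, 1]


def alerts_for_frame(
    detections: Iterable[Detection],
    label_to_led: Dict[str, str],
    threshold: float = 0.5,  # assumed confidence cut-off
) -> List[str]:
    """Return the LEDs to light for one frame, in first-seen order.

    Detections below the threshold, or with labels that have no alert,
    are ignored; each LED is listed at most once per frame.
    """
    leds: List[str] = []
    for det in detections:
        if det.score < threshold:
            continue
        led = label_to_led.get(det.label)
        if led is not None and led not in leds:
            leds.append(led)
    return leds


# One simulated frame standing in for the model's output.
frame = [
    Detection("car", 0.91),
    Detection("pedestrian", 0.32),     # below threshold: ignored
    Detection("traffic light", 0.74),
    Detection("car", 0.66),            # red LED already queued for this frame
]
leds = {"car": "red", "traffic sign": "blue", "traffic light": "blue", "pedestrian": "yellow"}
print(alerts_for_frame(frame, leds))  # prints: ['red', 'blue']
```

Deduplicating within a frame keeps several cars in the same frame from triggering the same alert several times.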

4. Project Outcome

Our project produced a fully functional machine-learning-powered dashboard camera system designed to enhance road safety. The system uses a streamlined quantized MobileNet V2 model, pre-trained on the COCO dataset and fine-tuned on the BDD-100K dataset, to detect objects at ranges of up to 120 meters. It alerts drivers with both audio (warning sounds) and visual (LED indicators) cues.

The project can be found on GitHub: https://github.com/design-smith/AI-Powered-Anti-collision-Driving-Assistant.git

Key Features and Outputs:

Real-Time Object Detection: The dashboard camera processes video input at a consistent 30 FPS, ensuring smooth performance in dynamic road environments. It can detect vehicles, pedestrians, and road signs with a mean average precision (mAP) of 70-75%.
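
Mean average precision depends on deciding whether each predicted box matches a ground-truth box, which is normally done with intersection over union (IoU). A prediction typically counts as a true positive when its IoU with a ground-truth box of the same class reaches a threshold: 0.5 in the PASCAL VOC convention, while COCO averages over thresholds from 0.5 to 0.95. The source does not say which convention the 70-75% figure uses. The sketch below shows only the IoU matching step, with boxes assumed to be (x_min, y_min, x_max, y_max) tuples.

```python
from typing import Tuple

# Assumed box format: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes (0.0 if they do not overlap)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_match(pred: Box, truth: Box, threshold: float = 0.5) -> bool:
    """True if the prediction overlaps the ground truth enough to count as a hit."""
    return iou(pred, truth) >= threshold


print(round(iou((0, 0, 2, 2), (1, 1, 3, 3)), 3))  # prints: 0.143
```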

Visual and Audio Alerts: The system uses a speaker that emits warning sounds and LED indicators that give visual signals when potential hazards are detected. These alerts are designed to be intuitive and easy to notice so that drivers can react promptly to avoid accidents.

Low Latency and High Detection Range: With an end-to-end latency of under 200 milliseconds, the system quickly processes images and delivers alerts, giving drivers time to respond. The detection range extends up to 120 meters, providing advance warning of hazards ahead.
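
To see how the latency and range figures combine: if an object first enters detection range at 120 m and the car closes on it at a constant speed, the time between the alert and reaching the object is the time to cover 120 m minus the system latency. The sketch below assumes a constant closing speed and the stated worst-case latency of 0.2 s; it is not a stopping-distance calculation and ignores braking and the driver's own reaction time.

```python
def warning_time_s(range_m: float, closing_speed_kmh: float, latency_s: float) -> float:
    """Seconds between the alert and reaching the object at a constant closing speed."""
    closing_speed_ms = closing_speed_kmh / 3.6  # km/h -> m/s
    return range_m / closing_speed_ms - latency_s


# Assumed inputs: 120 m detection range, 0.2 s worst-case latency.
for kmh in (50, 100, 130):
    print(f"{kmh} km/h: {warning_time_s(120, kmh, 0.2):.2f} s")
```

At 100 km/h this gives roughly 4.1 s, so the 0.2 s latency consumes only a small fraction of the warning window.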

Tech stack

This project was written entirely in Python.